1. Introduction

2. History and Overview about Artificial Neural Network

3. Single neural network

- 3.1 Perceptron

- 3.1.1 The Unit Step function

- 3.1.2 The Perceptron rules

- 3.1.3 The bias term

- 3.1.4 Implement Perceptron in Python

- 3.2 Adaptive Linear Neurons

- 3.2.1 Gradient Descent rule (Delta rule)

- 3.2.2 Learning rate in Gradient Descent

- 3.2.3 Implement Adaline in Python to classify Iris data

- 3.2.4 Learning via types of Gradient Descent

- 3.3 Problems with Perceptron (AI Winter)

- 4.1 Overview about Multi-layer Neural Network

- 4.2 Forward Propagation

- 4.3 Cost function

- 4.4 Backpropagation

- 4.5 Implement simple Multi-layer Neural Network to solve the problem of Perceptron

- 4.6 Some optional techniques for Multi-layer Neural Network Optimization

- 4.7 Multi-layer Neural Network for binary/multi classification

- 5.1 Overview about MNIST data

- 5.2 Implement Multi-layer Neural Network

- 5.3 Debugging Neural Network with Gradient Descent Checking

7. References

Multi-layer Neural Network

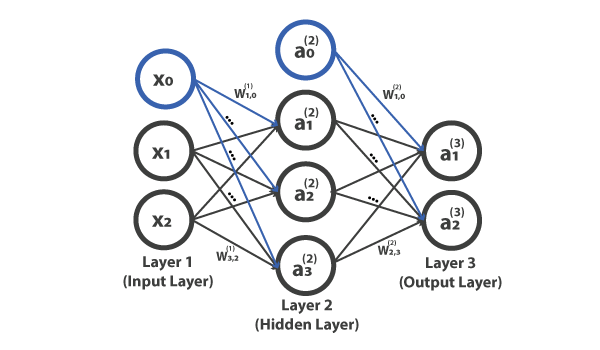

We have covered a lot about the Single Neural Network, and hopefully it makes sense to you. We also discussed the Multi-layer Neural Network in general terms in sub-section 2.2.2. In this section we go into more detail to discover why a Multi-layer Neural Network can solve the problem of the Perceptron. This should give you a deeper understanding and prepare you for the final part, where we apply a real Multi-layer Neural Network to a real-world problem: classifying handwritten MNIST data. First, look at the image and let's briefly review some concepts. As we know, a Multi-layer Neural Network has three layers:

- Input layer (Layer 1) receives the information, the so-called features or variables, from the data. The number of neurons in the input layer equals the number of features you want to feed in, plus 1 bias unit.

- Hidden layer (Layer 2) processes the information coming from the input layer. The hidden part can contain more than one layer, with many neurons in each layer. Such a model is called a Deep Neural Network, and it is out of the scope of this paper.

- Output layer (Layer 3) returns the output. Similarly, the number of neurons in the output layer depends on the outputs you want. For example, if your Neural Network is used for binary classification, the output layer has just two neurons, representing 0 and 1. We already implemented a Neural Network for binary classification in the previous section; in the next section we will implement a Multi-layer Neural Network for multi-class classification.

The network then learns in three steps:

- Activate the Multi-layer Neural Network by forward propagation: we feed the network starting from the input layer, and the information goes from left to right to generate the output.

- Based on the output, calculate the error, and use it to minimize the cost function that we discuss later.

- Update the weights using back-propagation, which we also discuss in detail later.
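
The layer sizes and the first two steps above can be sketched in plain Python. This is only a minimal sketch under assumptions that this section does not fix: a sigmoid activation, half the sum of squared differences as the error, random initial weights, a bias unit added to the hidden layer as well, and a hypothetical network with 2 features, 3 hidden neurons and 2 output neurons. The helper names (`init_weights`, `layer_forward`, `forward`, `squared_error`) are made up for illustration. The weight update by back-propagation is left out here because it is covered later.

```python
import math
import random


def sigmoid(z):
    """Logistic activation (an assumption): squashes any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def init_weights(n_in, n_out, rng):
    """One row per receiving neuron, n_in + 1 columns (the extra one is for the bias unit)."""
    return [[rng.uniform(-1.0, 1.0) for _ in range(n_in + 1)] for _ in range(n_out)]


def layer_forward(weights, inputs):
    """Prepend the bias unit (constant 1), then apply weighted sum and sigmoid per neuron."""
    with_bias = [1.0] + list(inputs)
    return [sigmoid(sum(w * a for w, a in zip(row, with_bias))) for row in weights]


def forward(x, w_hidden, w_output):
    """Forward propagation: input layer -> hidden layer -> output layer."""
    hidden = layer_forward(w_hidden, x)
    output = layer_forward(w_output, hidden)
    return hidden, output


def squared_error(output, target):
    """Half the sum of squared differences (an assumed error measure)."""
    return 0.5 * sum((o - t) ** 2 for o, t in zip(output, target))


# Hypothetical sizes: 2 features, 3 hidden neurons, 2 output neurons (class 0 and class 1).
rng = random.Random(0)  # fixed seed so the run is repeatable
n_features, n_hidden, n_outputs = 2, 3, 2
w_hidden = init_weights(n_features, n_hidden, rng)
w_output = init_weights(n_hidden, n_outputs, rng)

# One made-up sample that belongs to class 1, so the target is [0, 1].
x, target = [0.0, 1.0], [0.0, 1.0]
hidden, output = forward(x, w_hidden, w_output)
error = squared_error(output, target)

print("hidden activations:", hidden)
print("network output:", output)
print("error:", error)
```

With untrained random weights the output is arbitrary, so the error is usually far from zero. Reducing that error by adjusting `w_hidden` and `w_output` is the job of the third step.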
