1. Introduction

2. History and Overview of Artificial Neural Networks

3. Single neural network


- 3.1 Perceptron

- 3.1.1 The Unit Step function

- 3.1.2 The Perceptron rules

- 3.1.3 The bias term

- 3.1.4 Implementing the Perceptron in Python

- 3.2 Adaptive Linear Neurons

- 3.2.1 Gradient Descent rule (Delta rule)

- 3.2.2 Learning rate in Gradient Descent

- 3.2.3 Implementing Adaline in Python to classify the Iris data

- 3.2.4 Learning with different types of Gradient Descent

- 3.3 Problems with the Perceptron (AI Winter)

- 4.1 Overview of Multi-layer Neural Networks

- 4.2 Forward Propagation

- 4.3 Cost function

- 4.4 Backpropagation

- 4.5 Implementing a simple Multi-layer Neural Network to solve the Perceptron's problem

- 4.6 Some optional techniques for Multi-layer Neural Network optimization

- 4.7 Multi-layer Neural Networks for binary/multi-class classification

- 5.1 Overview of the MNIST data

- 5.2 Implementing a Multi-layer Neural Network

- 5.3 Debugging Neural Networks with Gradient Checking

7. References

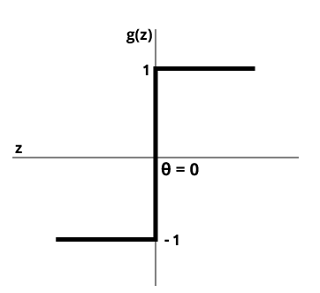

Unit Step function

Before going deeper into the unit step function, let's take a look at the perceptron image above, where the unit step function serves as the activation function (there are many other activation functions, which we will discuss later). So, why do we call it an activation function? As mentioned before, the idea behind the Perceptron is to mimic a neuron in the brain: when the net input \(z\) reaches a predefined threshold \(\theta\), the neuron "fires" and \(g(z)\) outputs 1; otherwise it outputs -1. This becomes clearer once we define \(z\) and \(g(z)\). In the image above, \(z\) is the net input, calculated by the net input function. It is simply a linear combination of the inputs \(x_1, \dots, x_m\) and the weights \(w_1, \dots, w_m\):

\[ z = w_1x_1 + w_2x_2 + \dots + w_mx_m = \sum_{j=1}^{m} w_j x_j = \mathbf{w}^T\mathbf{x} \]
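To make the net input and the threshold rule concrete, here is a minimal sketch in plain Python (no NumPy, so it runs anywhere). The function names `net_input` and `fires`, as well as the weights, inputs, and thresholds, are hypothetical values chosen for illustration only; they are not taken from a particular library or from the implementation later in this post.

```python
def net_input(w, x):
    """Return z = w_1*x_1 + ... + w_m*x_m, the linear combination of weights and inputs."""
    if len(w) != len(x):
        raise ValueError("w and x must have the same length")
    return sum(w_j * x_j for w_j, x_j in zip(w, x))


def fires(z, theta):
    """Return 1 if the net input z reaches the threshold theta, otherwise -1."""
    return 1 if z >= theta else -1


# Hypothetical weights and a single hypothetical input sample.
w = [0.5, -1.0, 2.0]
x = [2.0, 1.0, 0.25]

z = net_input(w, x)              # 0.5*2.0 + (-1.0)*1.0 + 2.0*0.25 = 0.5
print(z)                         # 0.5
print(fires(z, theta=0.3))       # 1  (z reaches the threshold)
print(fires(z, theta=1.0))       # -1 (z stays below the threshold)
```

Note that "reaching" the threshold is treated here as \(z \ge \theta\), so a net input exactly equal to \(\theta\) also fires.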

For simplicity, we assume \(\theta = 0\), so the activation function \(g(z)\) becomes:

\[ g(z) = \begin{cases} 1 & \text{if } z \ge 0 \\ -1 & \text{otherwise} \end{cases} \]
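With \(\theta = 0\), the unit step function only has to check the sign of the net input. The sketch below assumes a hypothetical helper named `unit_step` and a made-up list of net inputs (for example, one value per sample); it is an illustration, not the implementation used later in this post.

```python
def unit_step(z):
    """Unit step activation with theta = 0: return 1 if z >= 0, otherwise -1."""
    return 1 if z >= 0 else -1


# Hypothetical net inputs, e.g. one per training sample.
net_inputs = [-2.5, -0.1, 0.0, 0.7, 3.0]
print([unit_step(z) for z in net_inputs])  # [-1, -1, 1, 1, 1]
```

Because the condition \(z \ge \theta\) is the same as \(z - \theta \ge 0\), assuming \(\theta = 0\) does not lose generality: a nonzero threshold can always be moved into the net input.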
